Tasks

Install the Codex CLI locally before you dive into the workshop.

- Choose an install method:
  - Homebrew: `brew install --cask codex`
  - npm: `npm i -g @openai/codex`
  - Manual download: grab the latest binary from https://github.com/openai/codex/releases and add it to your PATH.
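If you are unsure which install method applies to your machine, a small helper can suggest one. This is only a sketch: `suggest_codex_install` is a hypothetical name, and it simply checks whether `brew` or `npm` is on your PATH before echoing the matching command from the list above.

```shell
# Hypothetical helper: prints the install command that matches the tools
# already available on this machine. It does not install anything itself.
suggest_codex_install() {
  if command -v brew >/dev/null 2>&1; then
    echo "brew install --cask codex"
  elif command -v npm >/dev/null 2>&1; then
    echo "npm i -g @openai/codex"
  else
    echo "Download a binary from https://github.com/openai/codex/releases and add it to your PATH."
  fi
}
```

Call `suggest_codex_install` in your terminal, then run the command it prints.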

Codex on Windows is still early; follow the official guide at https://developers.openai.com/codex/windows.

- Native Windows (quick start)
  - Install the Codex CLI and run it from PowerShell.
  - Agent mode works natively and uses an experimental sandbox to restrict file and network access. It can't block writes in folders where Everyone already has write access.
- WSL2 (recommended)
  - Follow the WSL2 instructions in the official guide.

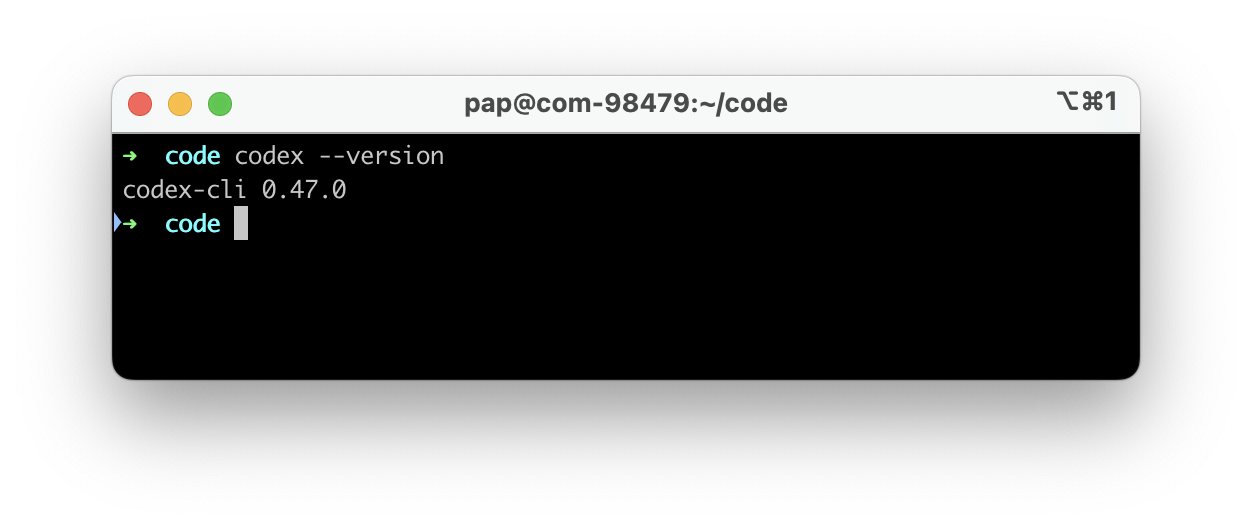

Confirm you are on the expected release before continuing.

- Run `codex --version`; expect at least `codex-cli 0.53.0` (the baseline when this workshop was written; update if you're behind).
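If you would rather not compare version numbers by eye, the check can be scripted. The sketch below is an illustration, not part of the Codex CLI: `version_at_least` and `extract_version` are hypothetical helpers, and the comparison assumes your `sort` supports the `-V` (version sort) flag.

```shell
# Hypothetical helper: succeeds when version $1 is at least version $2.
# Assumes `sort -V` is available (GNU coreutils and recent macOS/BSD sort).
version_at_least() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: pulls the first dotted version number out of a string
# such as "codex-cli 0.53.0".
extract_version() {
  printf '%s\n' "$1" | grep -Eo '[0-9]+(\.[0-9]+)+' | head -n 1
}

# Only query the CLI when it is installed, so the snippet is safe to paste anywhere.
if command -v codex >/dev/null 2>&1; then
  installed="$(extract_version "$(codex --version)")"
  if version_at_least "$installed" "0.53.0"; then
    echo "Codex CLI $installed meets the workshop baseline."
  else
    echo "Codex CLI $installed is older than 0.53.0; update before continuing."
  fi
fi
```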

Make sure Codex is signed in with the same ChatGPT account you use on the web.

- Run `codex login`.
- Your browser opens to complete sign-in; approve and return to the terminal once it confirms success.

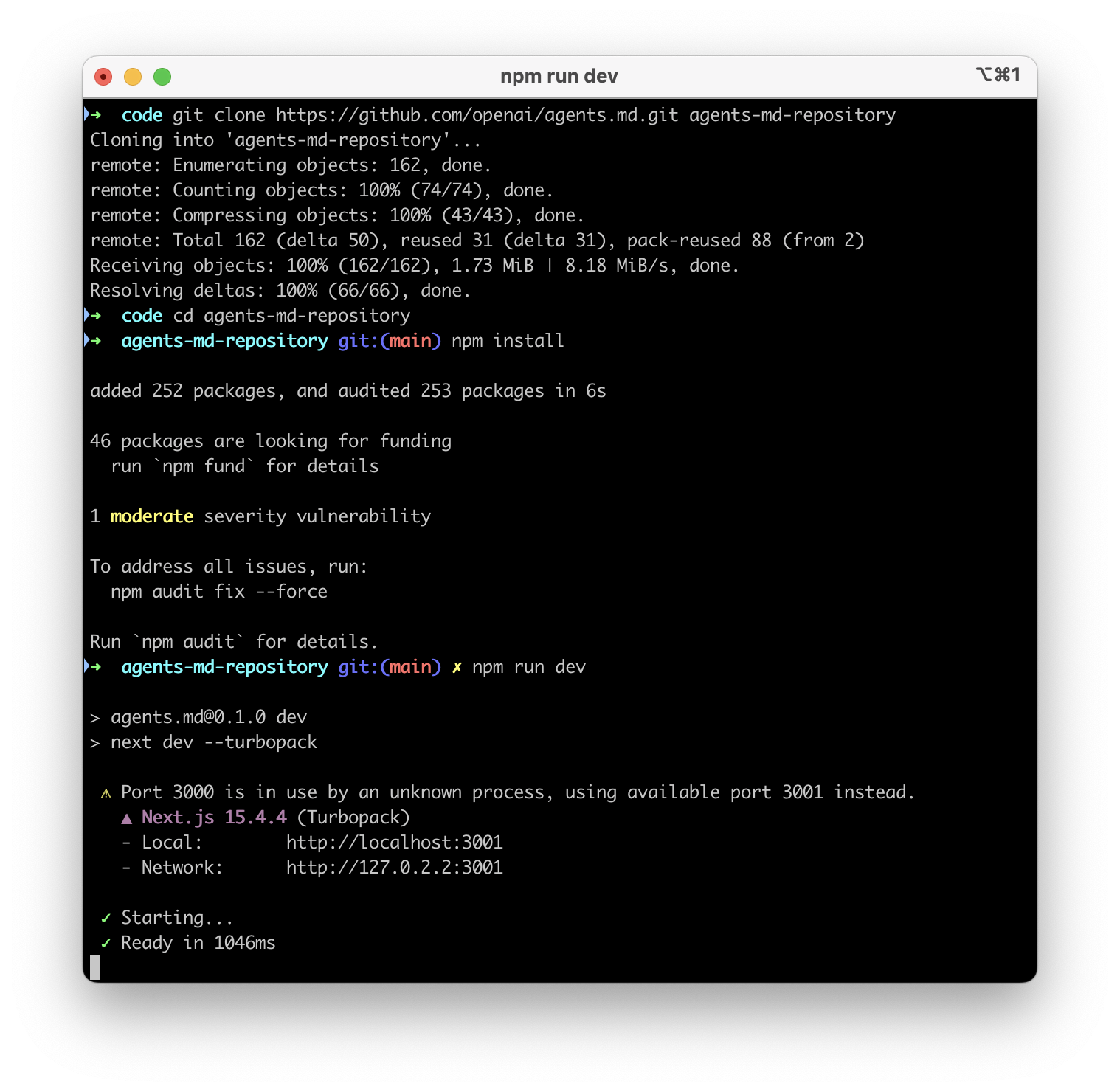

Pull down the sample repository you'll use throughout the exercises.

- Clone the repo with `git clone https://github.com/openai/agents.md.git agents-md-repository` (or download the ZIP from https://github.com/openai/agents.md and rename the folder to `agents-md-repository`).
- Open a separate terminal for the dev server and launch it by running `cd agents-md-repository`, `npm install`, and `npm run dev` in that order.
- Leave that terminal running; you'll use a different shell window for Codex commands.

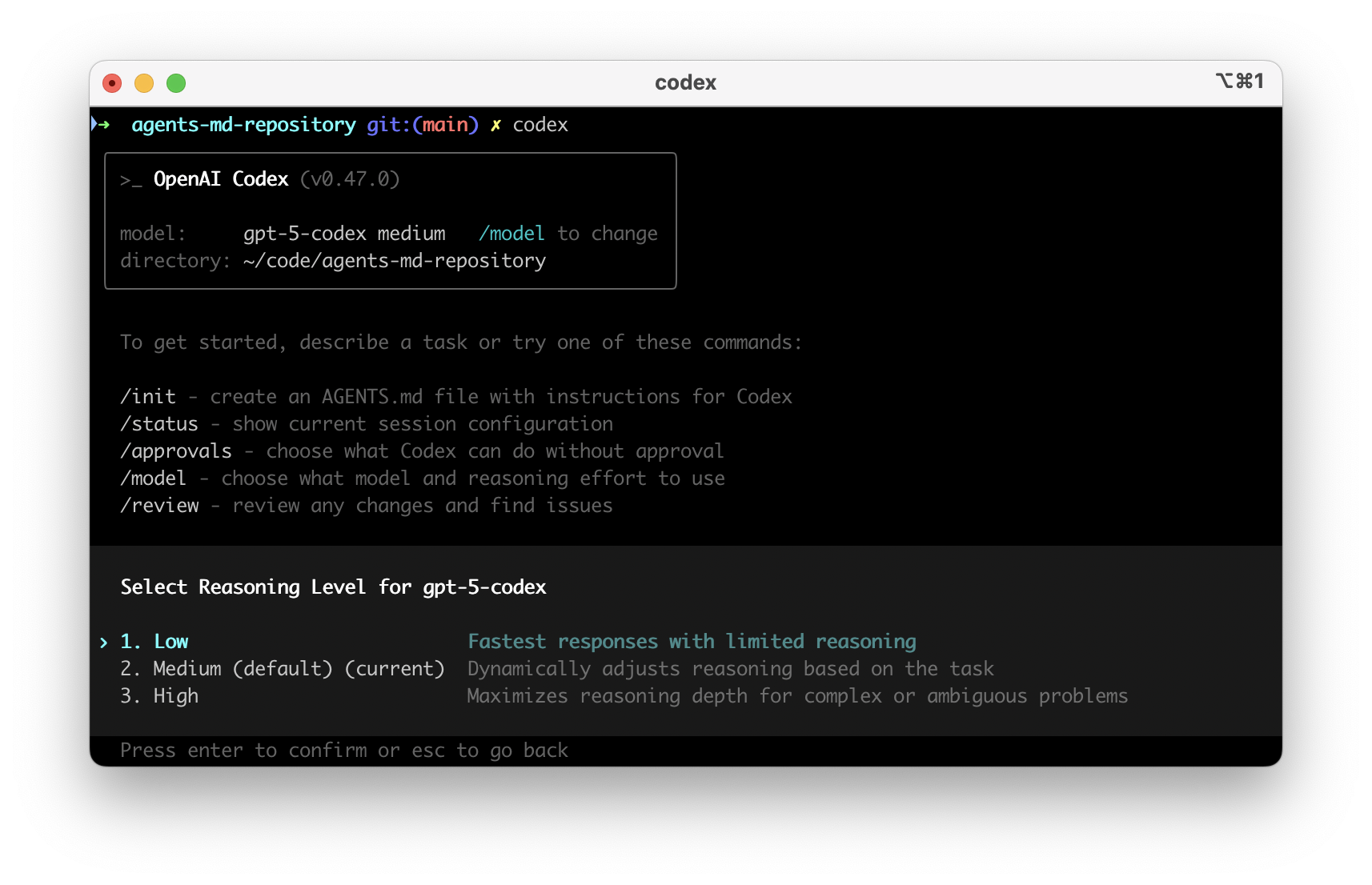

Switch Codex to Low reasoning before prompting; you will return to the recommended default once you finish testing.

- Run `codex`, type `/model`, highlight gpt-5.1-codex-max, press Enter, then select Low reasoning.

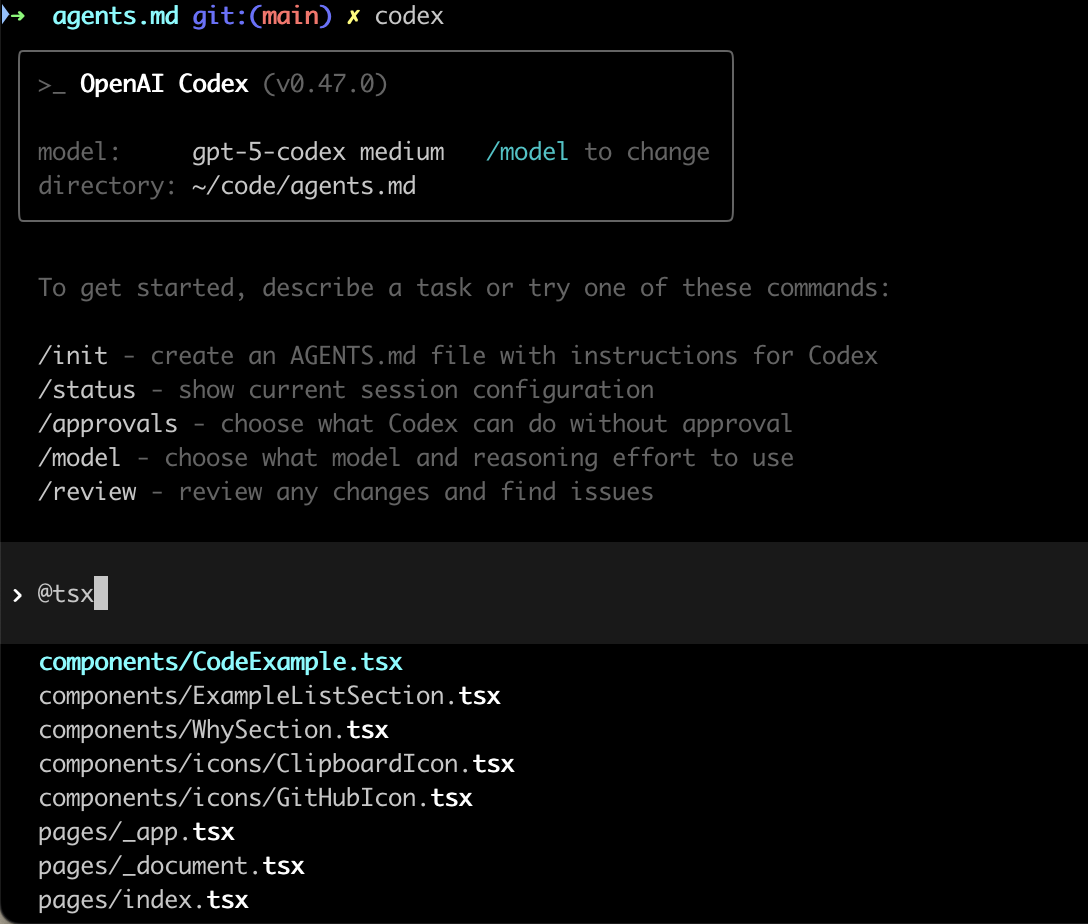

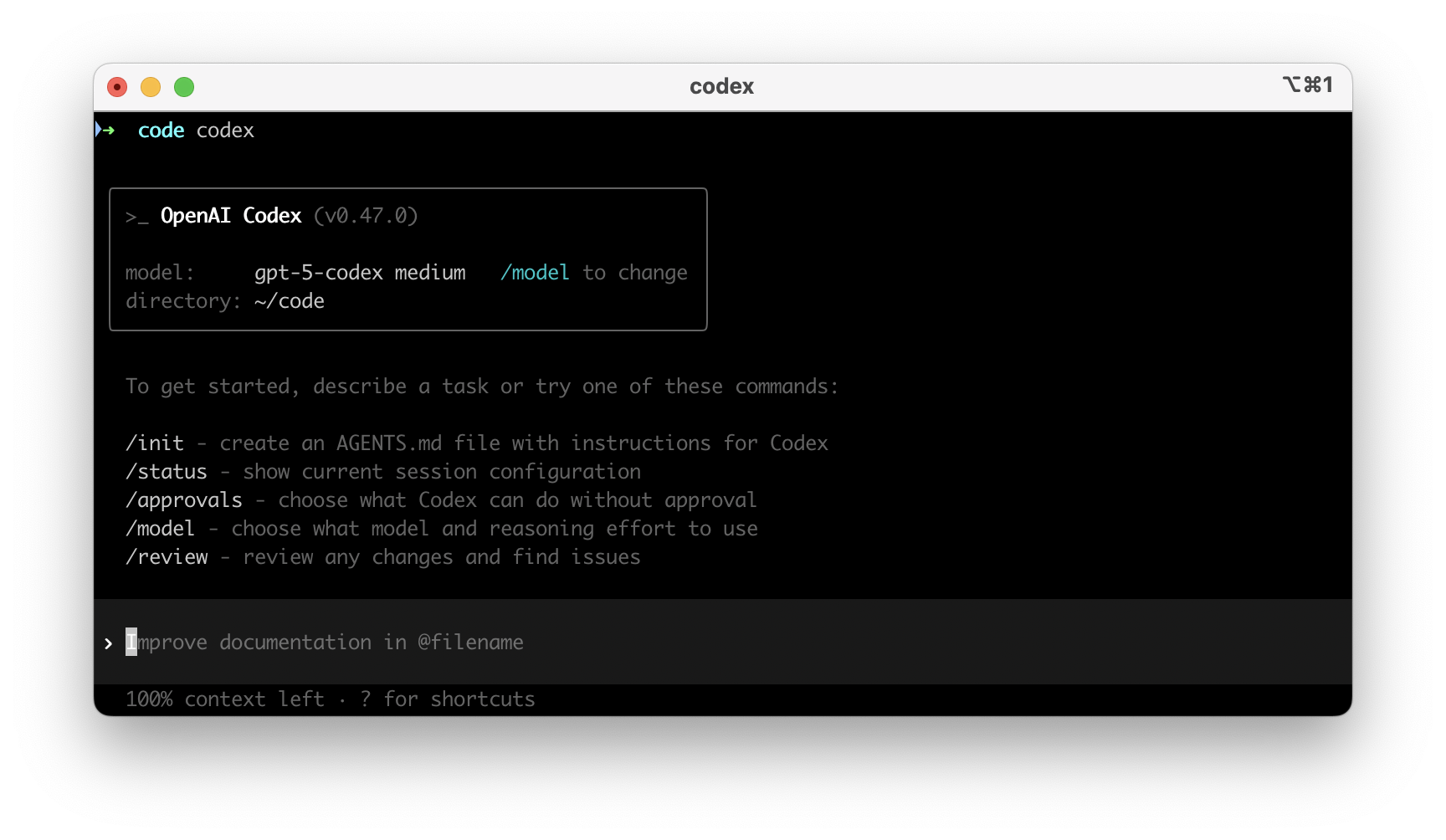

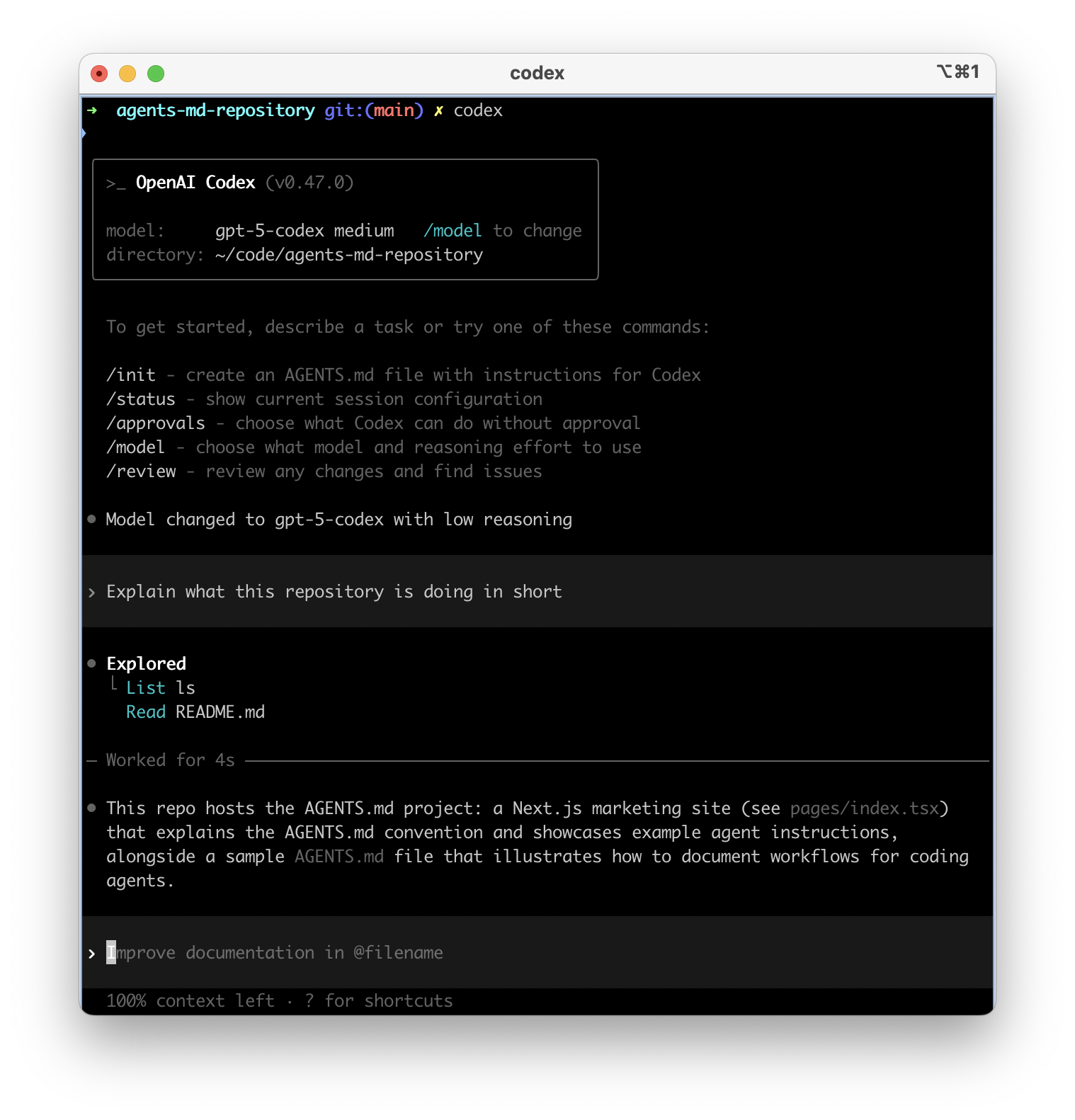

Practice asking Codex for analysis without editing files.

- With the low reasoning model active, ask: `Explain what this repository is doing in short.`

Return to the recommended default model before continuing.

- Start or resume a session, run `/model`, and pick gpt-5.1-codex-max, then confirm Medium reasoning (the best blend of depth and quality).
- Keep this as the default before moving on so follow-up tasks use the best model.

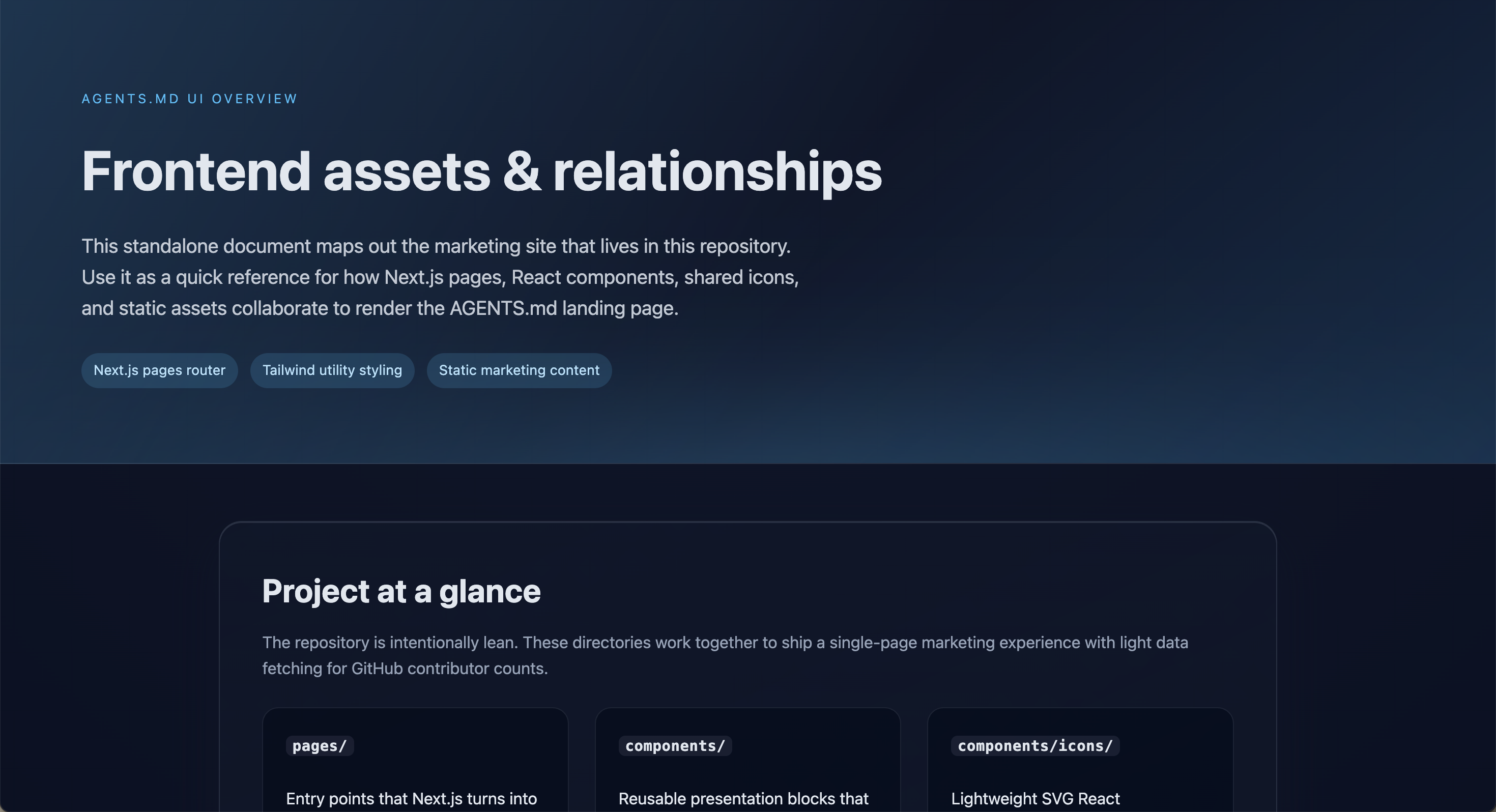

Run the following prompt to generate a visualization asset for the repo.

- Start a Codex session.

- Send: `Create a small HTML page in assets.html that shows how files are related to each others. I want a nice looking assets.html that explain those concepts.`
- Review the proposed asset to ensure the relationships are accurate before applying the patch.

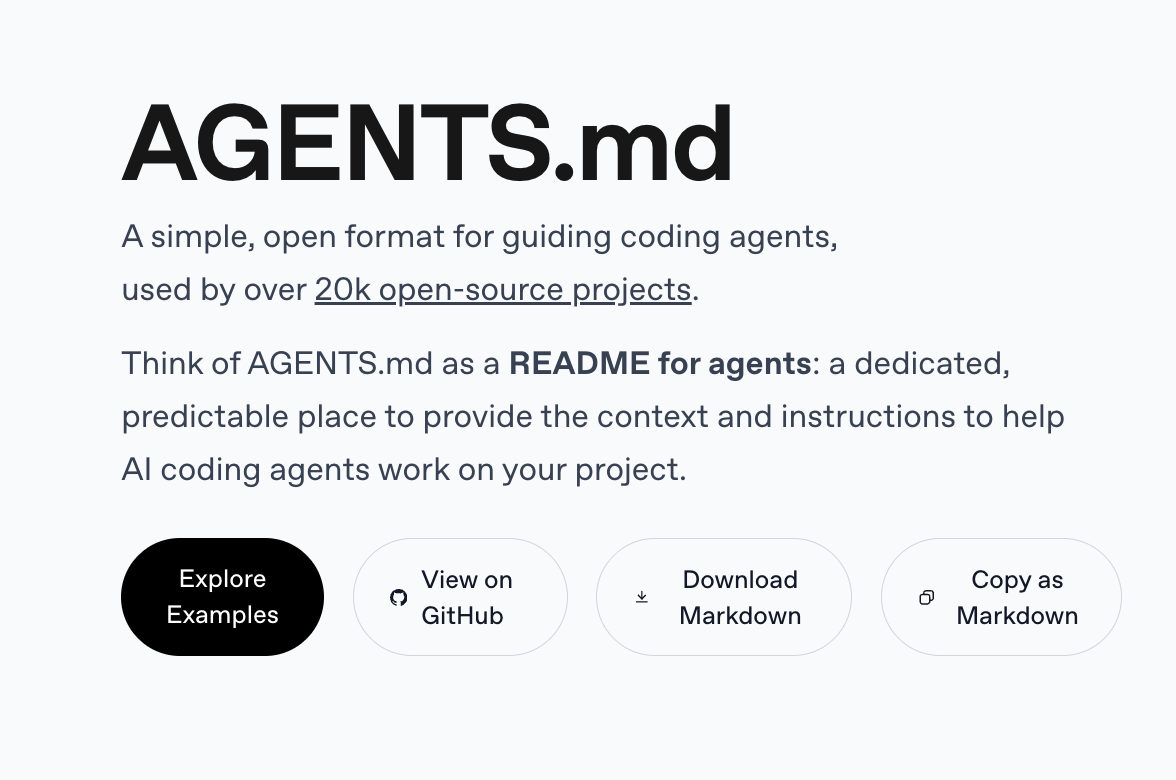

Have Codex modify the project by applying a patch.

- Start a new task and send: `Can you implement, next to the "Explore Examples" and "View on Github" buttons a button to download and another one to copy the page as markdown?`
- Review the diff Codex proposes before applying.

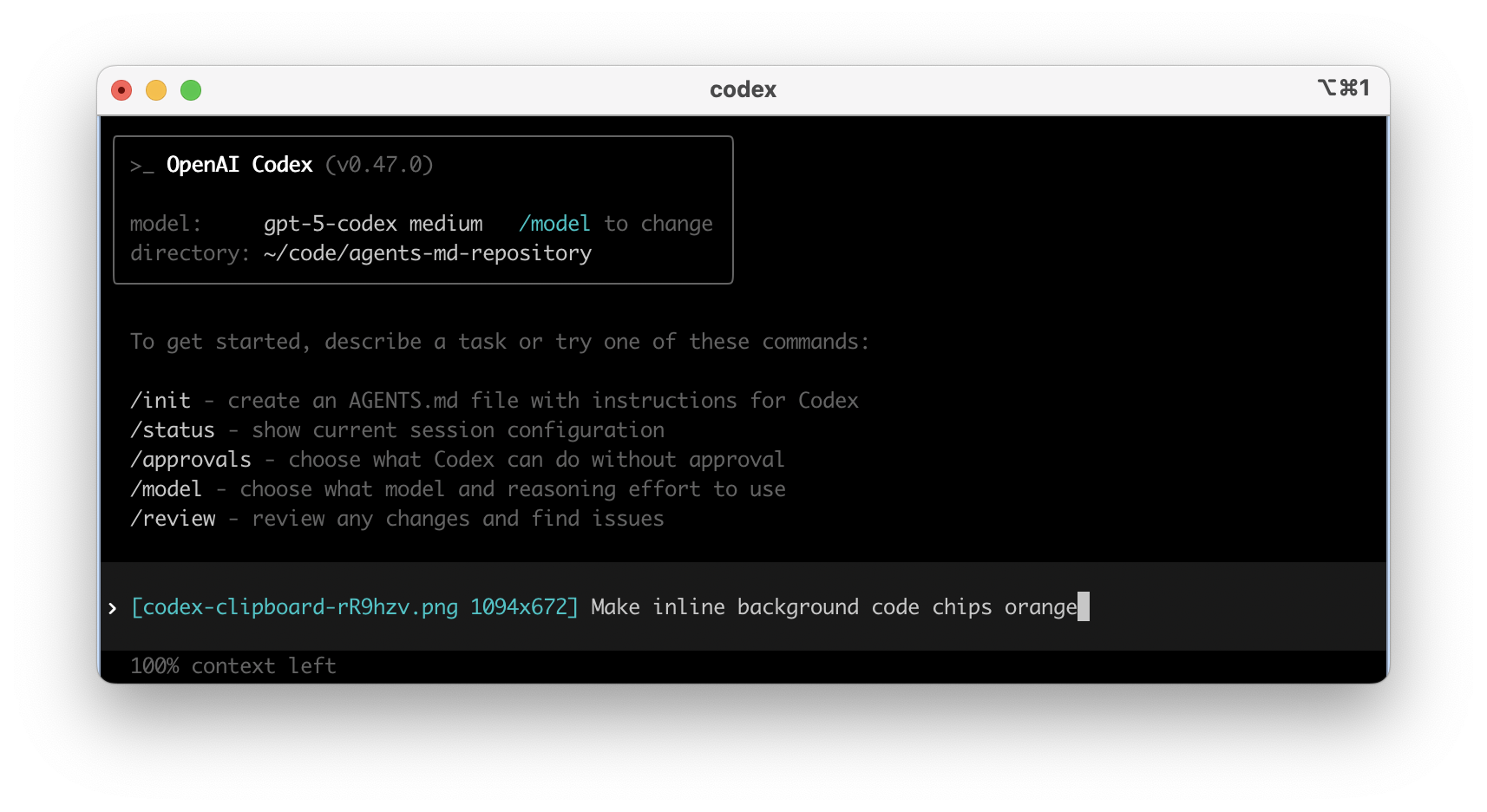

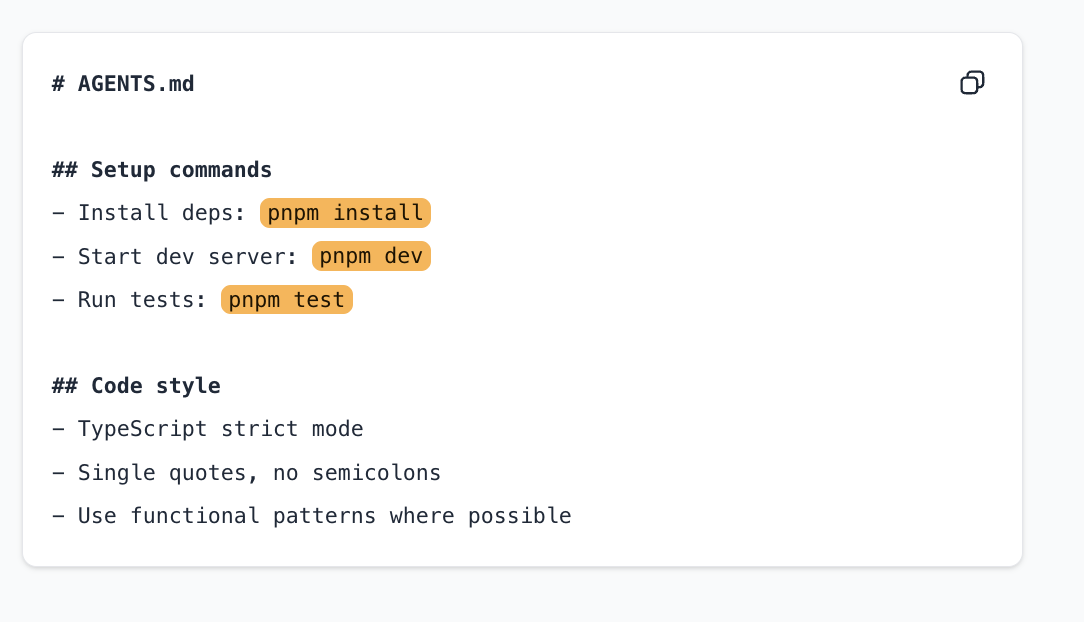

Practice attaching visuals and following up with styling tweaks.

- Capture a screenshot of the Agents.md textarea (top-right of the app).

- Paste the image directly (Ctrl+V on Mac or Ctrl+Shift+V on Windows) or reference it with `@agents-textarea.png`, then add: `Make inline background code chips orange.`
- Verify Codex updates the UI styling based on the screenshot.

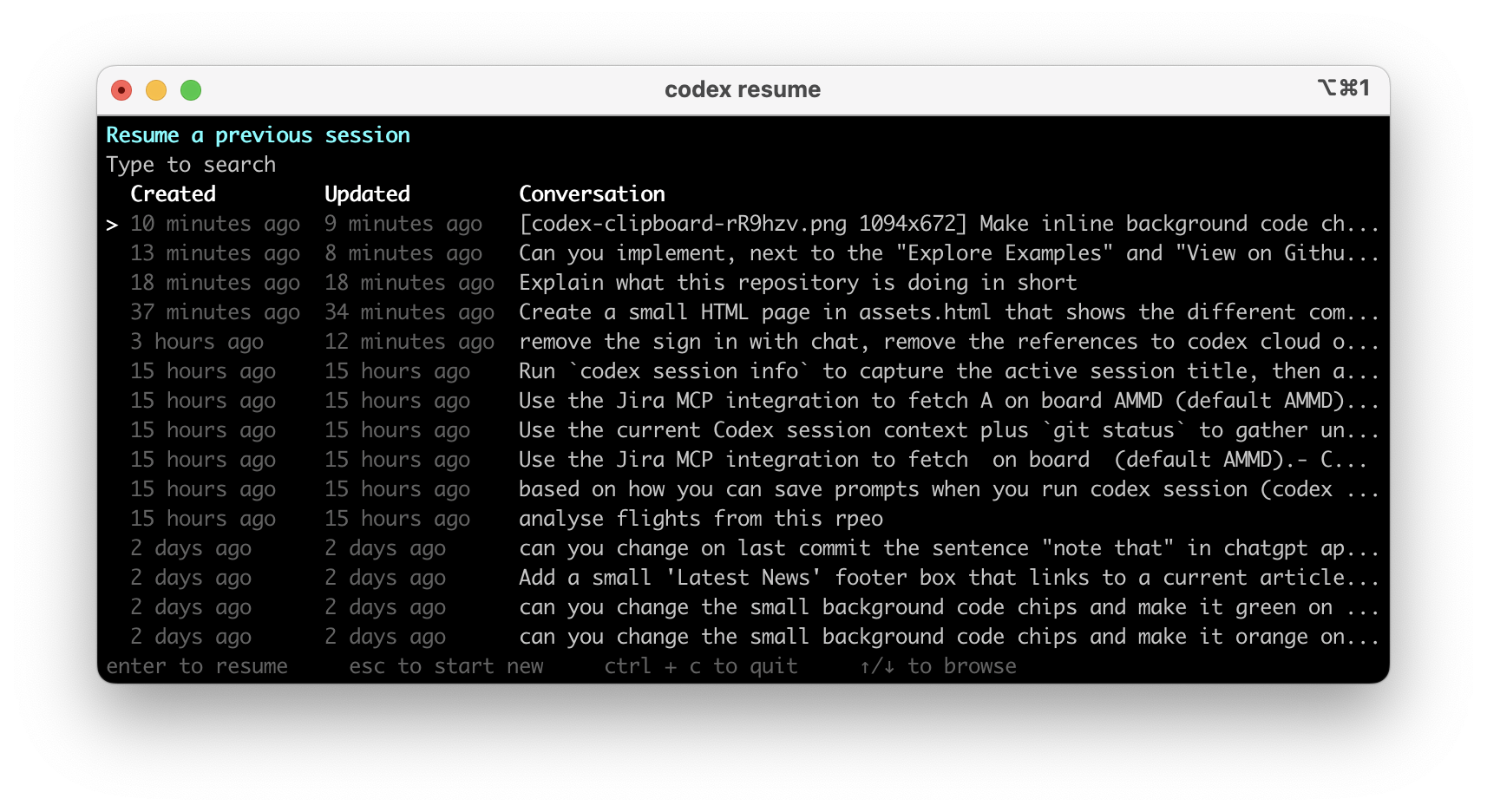

Learn how to jump back into an existing thread.

- Run `codex resume`.
- Search for the task titled “chip code/background orange”.
- Reopen it and ask, “actually make that green”.
- Confirm Codex replays the context and updates the color.

Note: you can also run `codex resume <session_id>` directly, using the command shown when you exit the CLI at the end of a session.

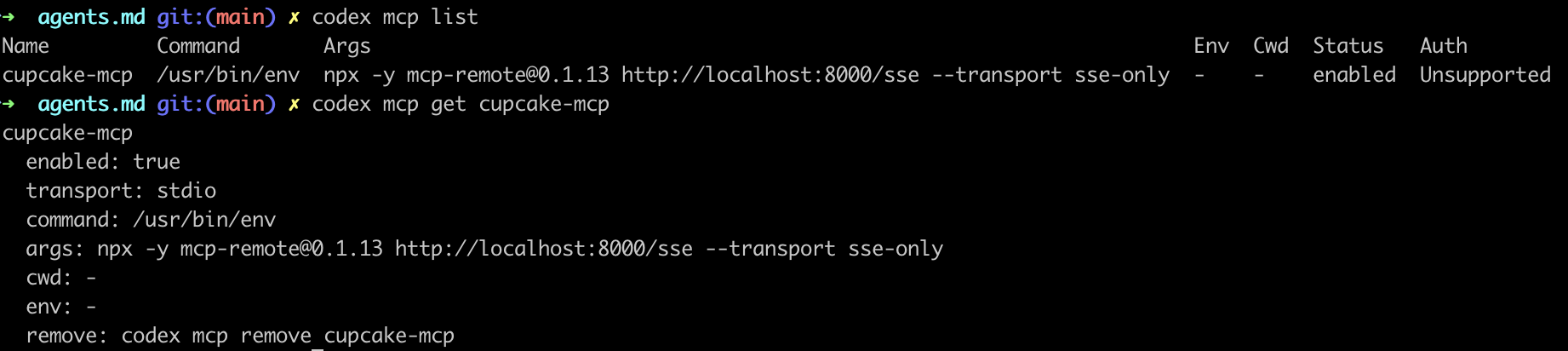

Use the Cupcake MCP sample so you can pull product requirements on demand.

Review the Model Context Protocol overview: https://developers.openai.com/codex/mcp. The Cupcake MCP server is hosted at `https://codex-102.vercel.app/mcp`.

- Register the server: `codex mcp add cupcakemcp --url https://codex-102.vercel.app/mcp`
- Verify registration: run `codex mcp list` and `codex mcp get cupcakemcp`. You should see the `cupcakemcp` entry in the output of both commands.
- Spot-check the list output for the expected entry.
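The spot-check can be reduced to a one-line filter. The sketch below is an assumption-laden illustration: `has_mcp_entry` is a hypothetical helper, and it only assumes that the server name appears somewhere in the `codex mcp list` output, whatever the exact table layout is.

```shell
# Hypothetical helper: reads `codex mcp list` output on stdin and succeeds
# when some line mentions the given server name as a whole word.
has_mcp_entry() {
  grep -qw -- "$1"
}
```

For example, `codex mcp list | has_mcp_entry cupcakemcp && echo "cupcakemcp is registered"` prints the confirmation only when the entry is present.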

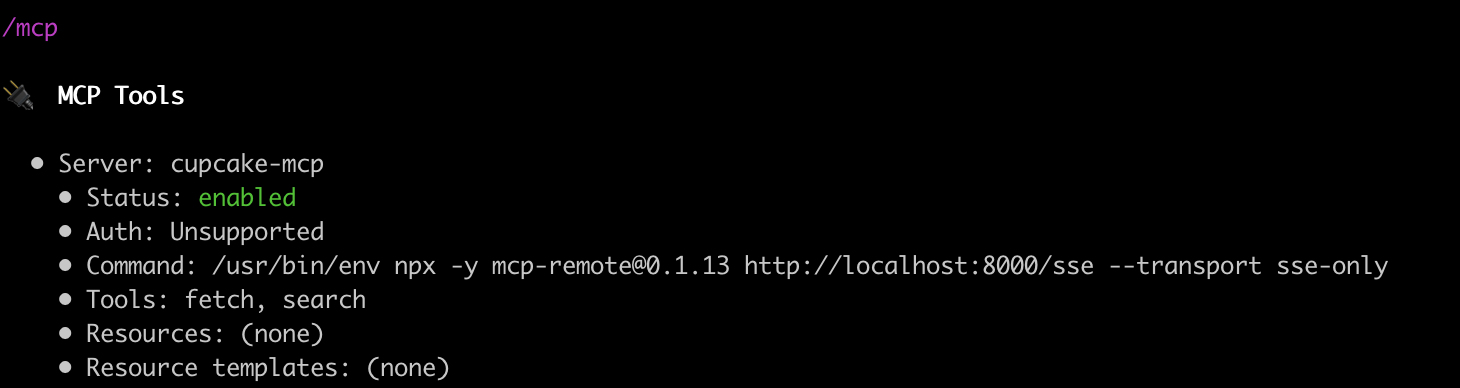

Verify Codex can reach Cupcake through the MCP server before you rely on it.

- In a Codex chat, run `/mcp` to confirm the server connection is healthy.
- If it fails, re-check the `config.toml` entry or the CLI flags from https://developers.openai.com/codex/mcp.
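When re-checking the configuration, it can help to confirm that the entry exists at all. The sketch below assumes (verify this against the MCP documentation linked above) that servers are stored as `[mcp_servers.<name>]` tables in `~/.codex/config.toml`; `mcp_configured` is a hypothetical helper, not a Codex command.

```shell
# Hypothetical helper: succeeds when the config file contains a table header
# for the named MCP server. Assumes the `[mcp_servers.<name>]` layout and a
# default config path of ~/.codex/config.toml; pass another path as $2.
mcp_configured() {
  name="$1"
  config="${2:-$HOME/.codex/config.toml}"
  [ -f "$config" ] && grep -qF -- "[mcp_servers.$name]" "$config"
}
```

Running `mcp_configured cupcakemcp || echo "No cupcakemcp entry found"` tells you whether to re-register the server before debugging the connection itself.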

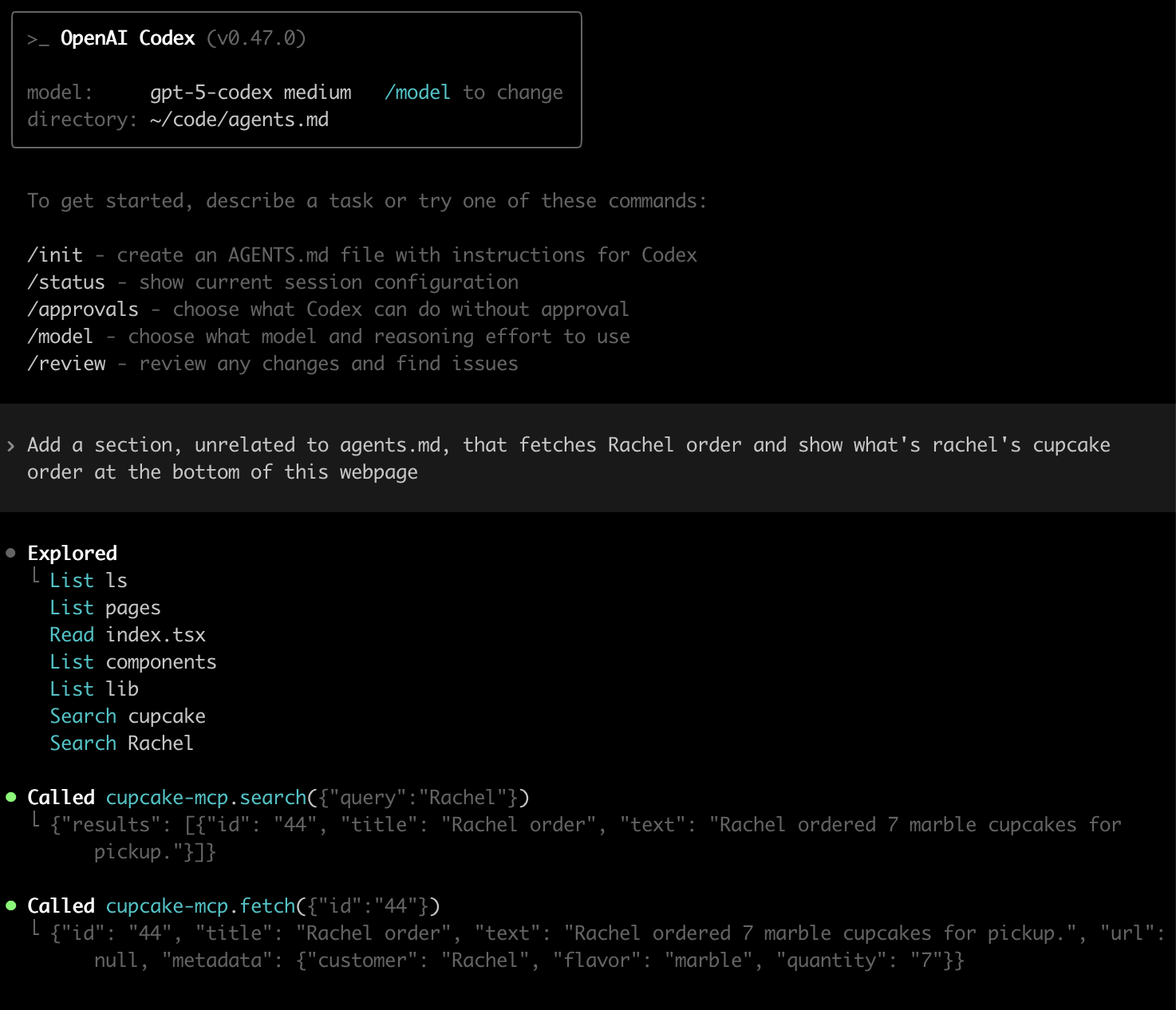

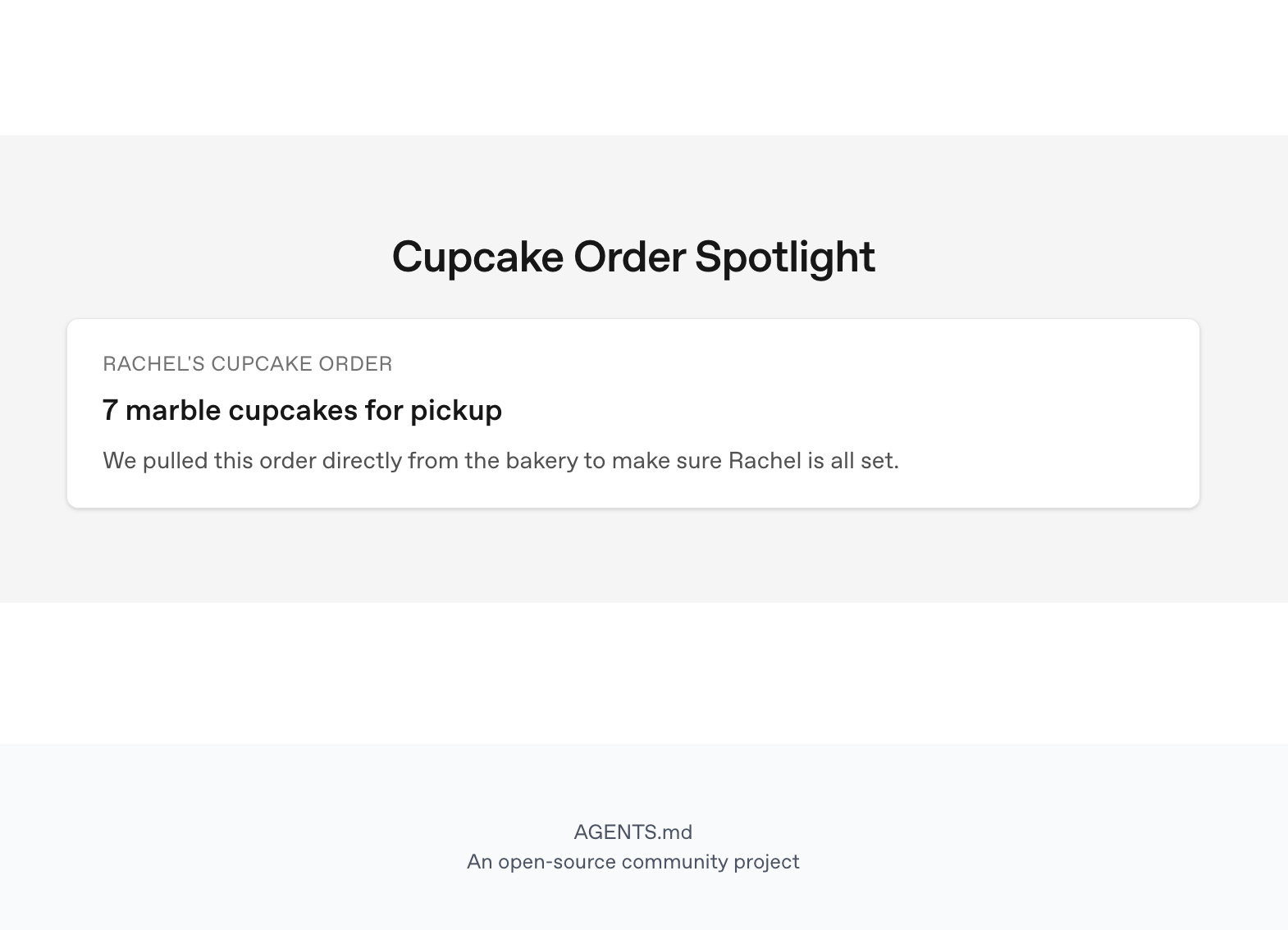

Test the MCP integration by pulling product requirements before coding.

- Start a fresh session: run `codex` and create a new chat.
- Send: `Add a section, unrelated to agents.md, that fetches Rachel order and show what's rachel's cupcake order at the bottom of this webpage`
- Let Codex fetch the Cupcake records via MCP (Rachel's order is at records.json#L260).

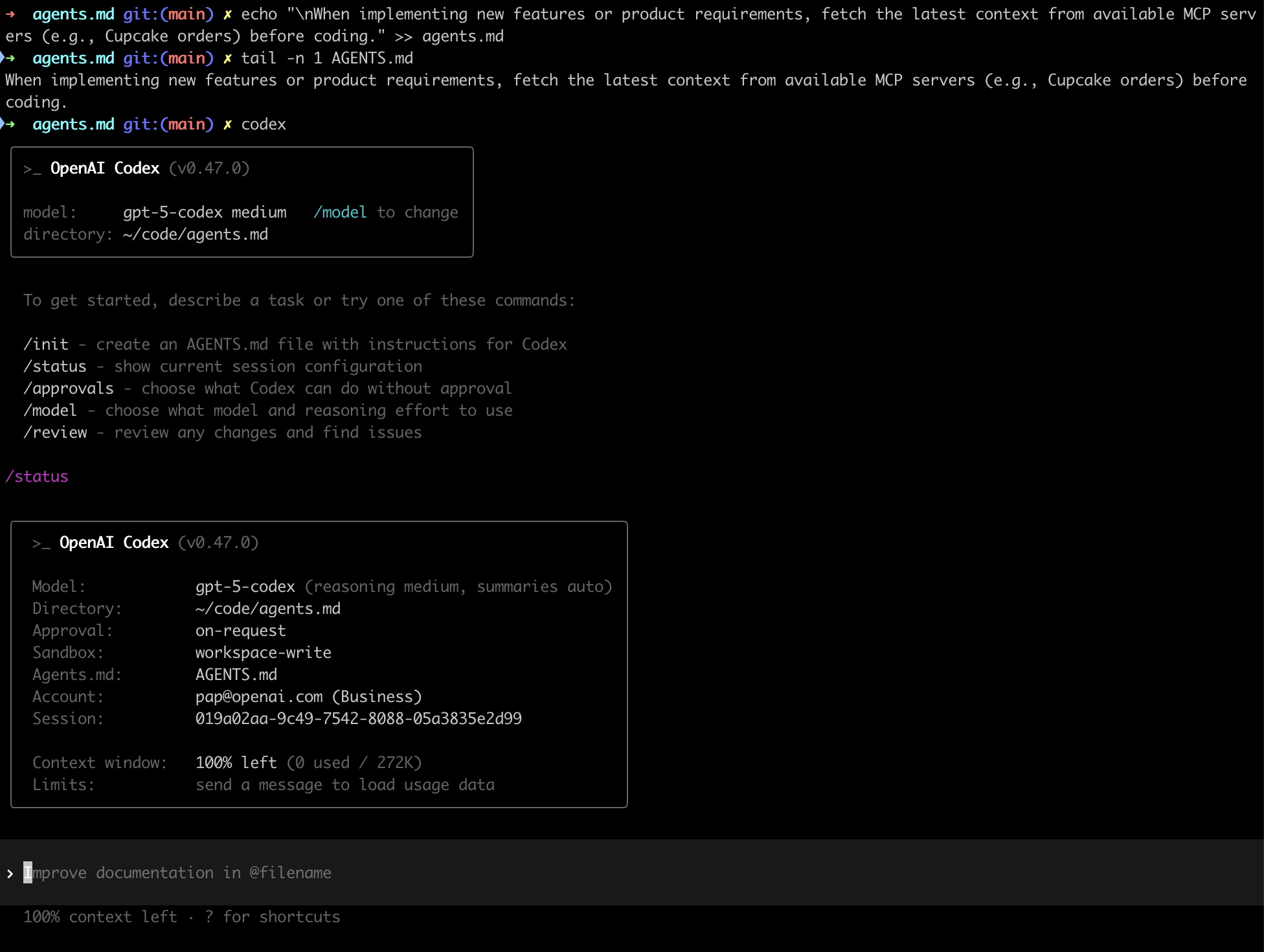

Make Codex adhere to our standards by leveraging AGENTS.md.

Codex loads this file into its context and follows the instructions in it.

- Append the note: `echo "When implementing new features or product requirements, fetch the latest context from available MCP servers (e.g. Cupcake orders) before coding." >> AGENTS.md`
- Save the changes.
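Because `>>` appends every time it runs, repeating this step would duplicate the note. A minimal sketch of an idempotent variant follows; `append_once` is a hypothetical helper introduced only for illustration.

```shell
# Hypothetical helper: appends a line to a file unless that exact line is
# already present, so rerunning the step does not duplicate the note.
append_once() {
  file="$1"
  line="$2"
  touch "$file"
  # -x matches whole lines only, -F treats the note as a literal string.
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Pass the file and the note as the two arguments, for example `append_once AGENTS.md "<your note>"`, run from the repository root.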

Make the new guidance available in every project.

- Move the appended content into `~/.codex/AGENTS.md` (create the directory if it doesn't exist).
- Clean up the project copy so only the global file keeps the note.
- Start a new Codex chat and run the prompt `In which context would you use MCPs we have access to?` to confirm the global guidance sticks.
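The move and the cleanup can be done in one pass. This is a sketch under stated assumptions: `promote_line` is a hypothetical helper, and it treats the note as a single line that should exist in the global file and nowhere in the project file.

```shell
# Hypothetical helper: copies one exact line into a global file (creating its
# directory if needed) and removes that line from the project file.
promote_line() {
  project_file="$1"
  global_file="$2"
  line="$3"
  mkdir -p "$(dirname "$global_file")"
  touch "$global_file"
  # Add the line to the global file only if it is not already there.
  grep -qxF -- "$line" "$global_file" || printf '%s\n' "$line" >> "$global_file"
  # Rewrite the project file without the line; grep exits 1 when nothing is left.
  tmp="$(mktemp)"
  grep -vxF -- "$line" "$project_file" > "$tmp" || true
  cat "$tmp" > "$project_file"
  rm -f "$tmp"
}
```

A typical call would be `promote_line AGENTS.md "$HOME/.codex/AGENTS.md" "<your note>"`, after which only the global file carries the note.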

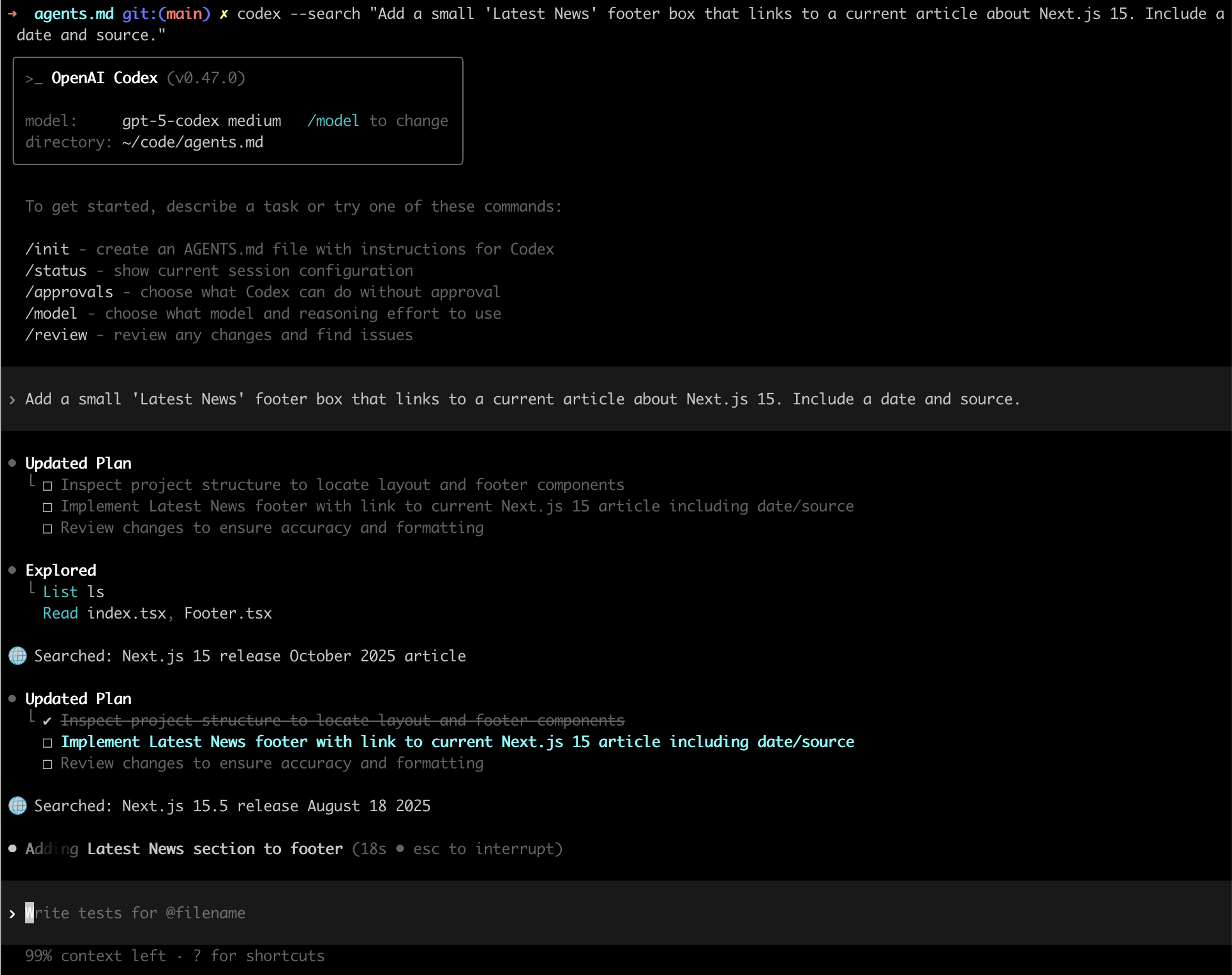

Use search to jump back to prior work.

- Run:

codex --search "Add a small 'Latest News' footer box that links to a current article about Next.js 15. Include a date and source."

- Pick the matching task from the results and reopen it if you need the history.

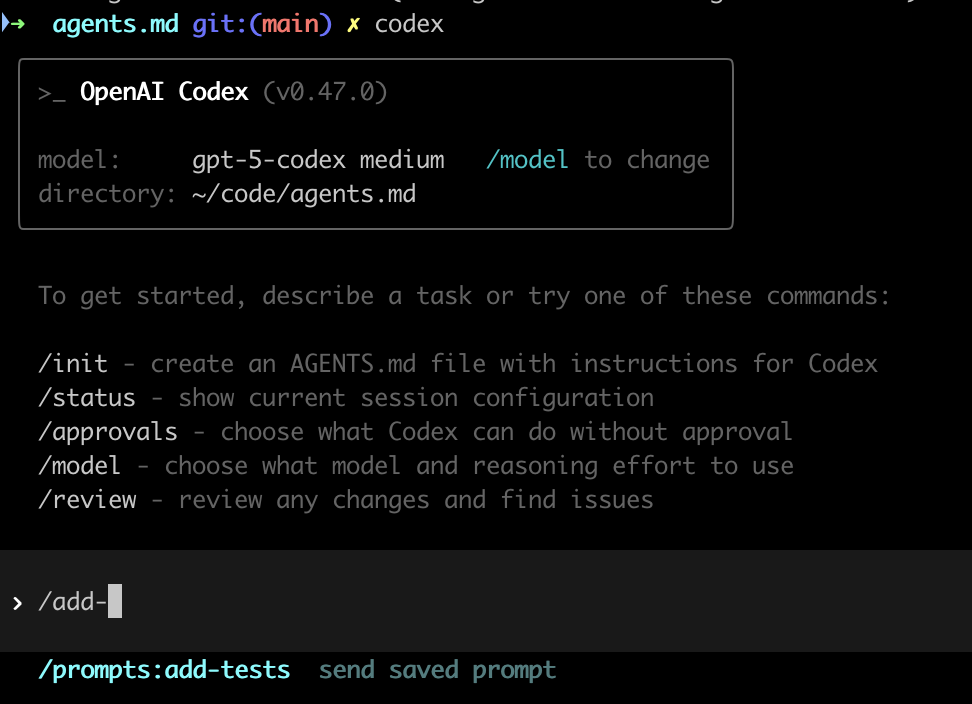

Automate test generation prompts for future sessions.

- Save your standard ask:

  - Create the `~/.codex/prompts` directory, if it does not already exist, to store saved commands.
  - Create `~/.codex/prompts/add-tests.md` containing: `Generate unit tests for the touched files. Use the project’s existing test runner and conventions. Keep diffs minimal and runnable locally.`
- Resume the “Latest News” task (use `codex resume` and type to search) and run `/add-tests` to invoke the new command.
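Saving a prompt amounts to creating the directory and writing one Markdown file. The sketch below wraps that in a hypothetical helper, `save_prompt`; the optional third argument exists only so you can try it against a scratch directory instead of `~/.codex/prompts`.

```shell
# Hypothetical helper: writes a prompt body to <dir>/<name>.md, creating the
# directory first. <dir> defaults to ~/.codex/prompts.
save_prompt() {
  name="$1"
  body="$2"
  dir="${3:-$HOME/.codex/prompts}"
  mkdir -p "$dir"
  printf '%s\n' "$body" > "$dir/$name.md"
}
```

For this step you could run `save_prompt add-tests "Generate unit tests for the touched files. Use the project's existing test runner and conventions. Keep diffs minimal and runnable locally."`.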

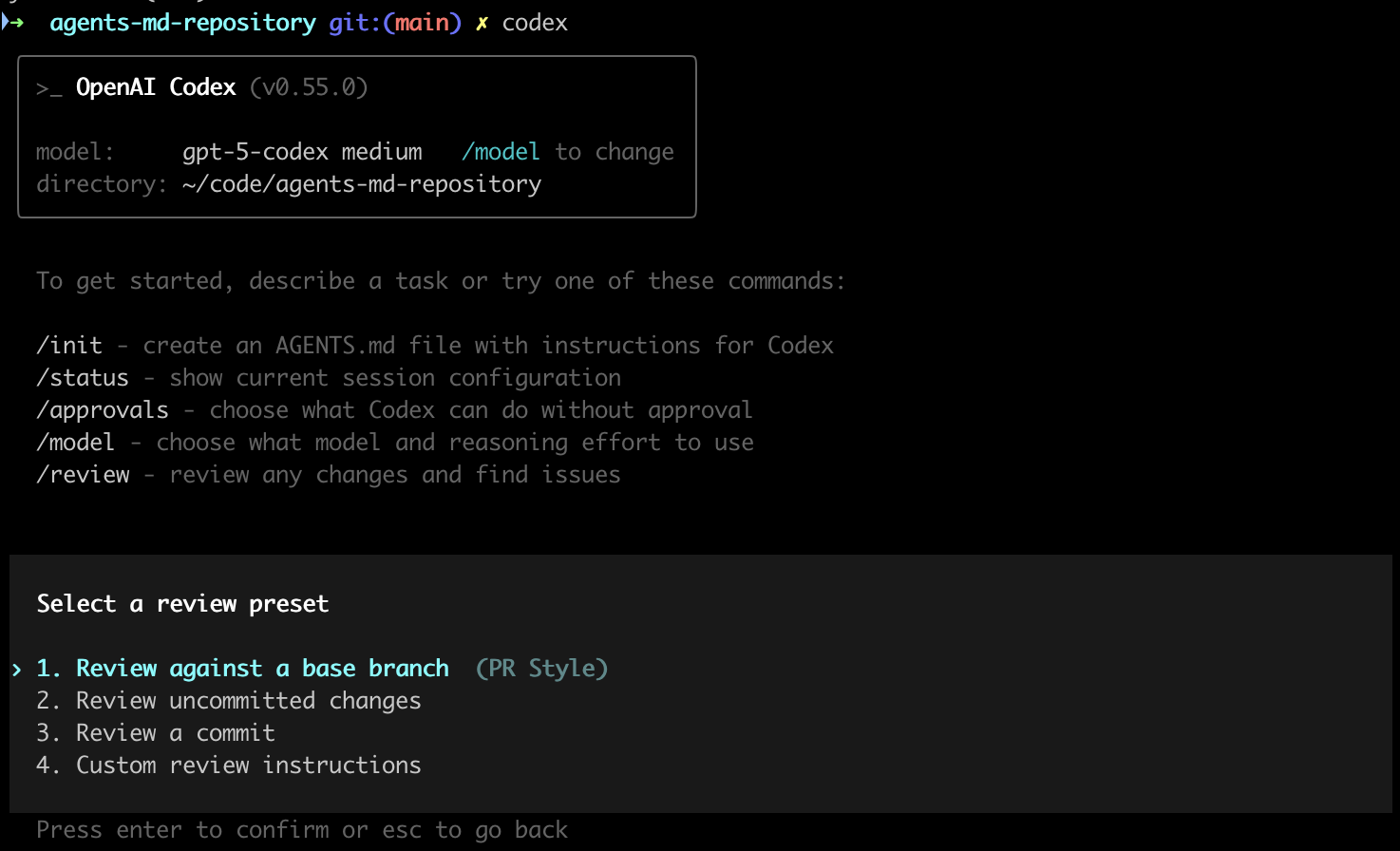

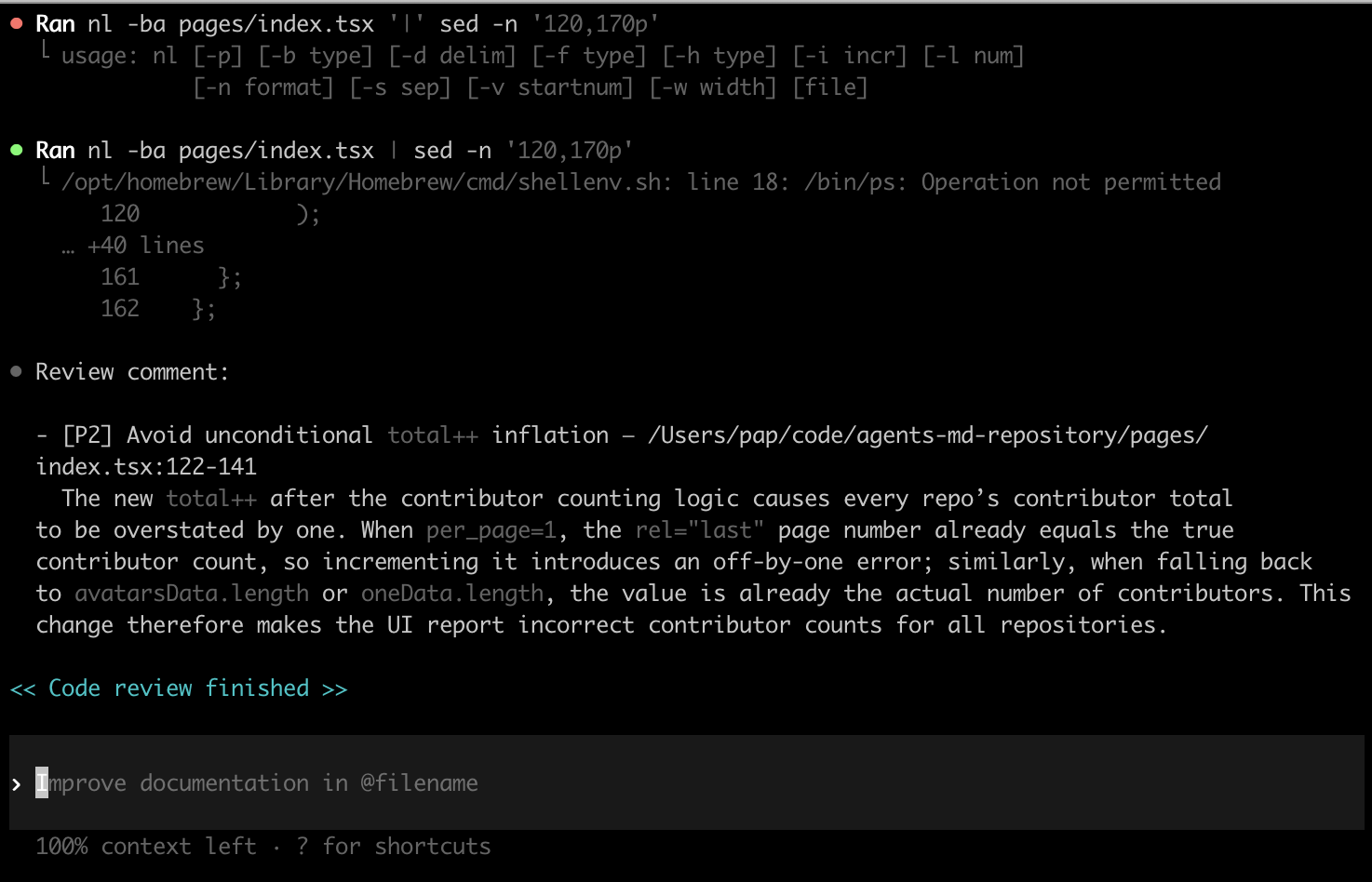

Use `/review` to inspect a small regression you introduce on purpose.

- In your repo, open `pages/index.tsx` and scroll to the `getStaticProps` loop that builds `contributorsByRepo`. Inside that loop, right before the `contributorsByRepo[fullName] = { avatars, total }` assignment, add one line: `total++;`. Leave everything else unchanged so the counter quietly creeps up by one.
- Start a fresh Codex CLI session in the repo and run `/review`. Choose Review against a base branch and select `main`.
- Let Codex run the review and read the findings that flag the inflated total.

Close out by skimming the official guidance.

- Read https://developers.openai.com/codex/prompting/ for Codex-specific prompting tips.

- Be precise, avoid conflicting instructions, and match reasoning effort to task complexity (use `<self_reflection>` when helpful).
- Use XML-like sectioning for multi-part asks and keep language cooperative.
- Remember layered `AGENTS.md` files:
  - Global (`~/.codex/AGENTS.md`) for personal defaults or safety constraints.
  - Project (`AGENTS.md`) for repo-specific guidance.
  - Directory (`AGENTS.md`) for local overrides.
- Capture any personal defaults you want in your own `AGENTS.md` files.
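To see which layers apply to a given folder, you can list the `AGENTS.md` files from most general to most specific. The sketch below is an illustration of the layering described above, not Codex's actual lookup logic: `list_agents_files` is a hypothetical helper, and it assumes the project root is the nearest ancestor containing a `.git` entry.

```shell
# Hypothetical helper: prints the global AGENTS.md (if present), then every
# AGENTS.md between the repository root and the given directory, outermost first.
list_agents_files() {
  dir="$(cd "$1" && pwd)"
  global="${2:-$HOME/.codex/AGENTS.md}"
  found=""
  while :; do
    if [ -f "$dir/AGENTS.md" ]; then
      # Prepend so that files closer to the repository root come first.
      found="$dir/AGENTS.md
$found"
    fi
    # Stop at the repository root (assumed to contain .git) or at /.
    if [ -e "$dir/.git" ] || [ "$dir" = "/" ]; then
      break
    fi
    dir="$(dirname "$dir")"
  done
  if [ -f "$global" ]; then
    printf '%s\n' "$global"
  fi
  printf '%s' "$found"
}
```

Running `list_agents_files .` from a subfolder shows the order in which the layers stack, with the last line being the most local file.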

Bookmark the go-to references for deeper Codex work.

- Open the product hub at https://developers.openai.com/codex for docs, demos, and release notes.

- Explore the Codex SDK: https://developers.openai.com/codex/sdk.

- Review the GitHub Action wiring guide: https://developers.openai.com/codex/sdk#github-action.

- Track updates via the changelog: https://developers.openai.com/codex/changelog.